People have engaged with smart speakers for some years now, so it’s commonplace for people to ask Alexa, Siri and the like for the weather forecast, mathematical calculations and other information – and this robotically voiced-back material has become both broader and more exact in this era of artificial intelligence (AI). But suppose the information is instead coming from anthropomorphic, or humanlike, characters in an office or boardroom. What are the advantages and disadvantages, and what are the workplace implications?

These issues are explored in research co-authored by Professor Jochen Menges, who observes that little was previously known about how employees emotionally navigate relationships with AI agents displaying distinct personality traits. This could increasingly be the case going forward, as some foresee workplaces that naturally blend the real world with virtual augmentations.

From Alexa to the boardroom: the rise of anthropomorphic AI at work

“The practical implications of this research for AI designers and workplace managers is that it’s really important to think about user emotions when developing human-centred AI agents,” says Jochen, who is Professor of Leadership at Cambridge Judge Business School and at the University of Zurich where he runs the Center for Leadership in the Future of Work.

“Early chatbots had a robotic-like voice that varies little from answer to answer. But our study highlights that more modern anthropomorphic features such as voice intonation, conversational style and appearance are important in strengthening users’ positive emotions as they interact with AI, but there are also drawbacks from poor design or implementation.”

As the research concludes, these design approaches should “foster, rather than hinder, employee engagement, ensuring AI contributes positively to corporate environments”. This is especially important if the workplace evolves, as some envision, with individuals working alongside autonomous AI agents that perform key roles within the organisation.

The study – entitled “When AI gets personal: employee emotional responses to anthropomorphic AI agents in a virtual workspace” – is co-authored by Anand van Zelderen of SKEMA Business School, Sinuo Wu of University of South-Eastern Norway, Gergely Koszo of University of St Gallen and Jochen Menges of University of Zurich and Cambridge Judge Business School.

Testing AI agents: from text-based virtual displays to humanlike colleagues

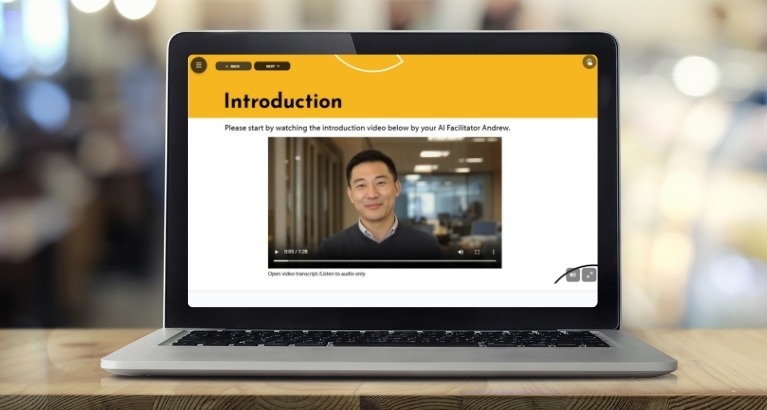

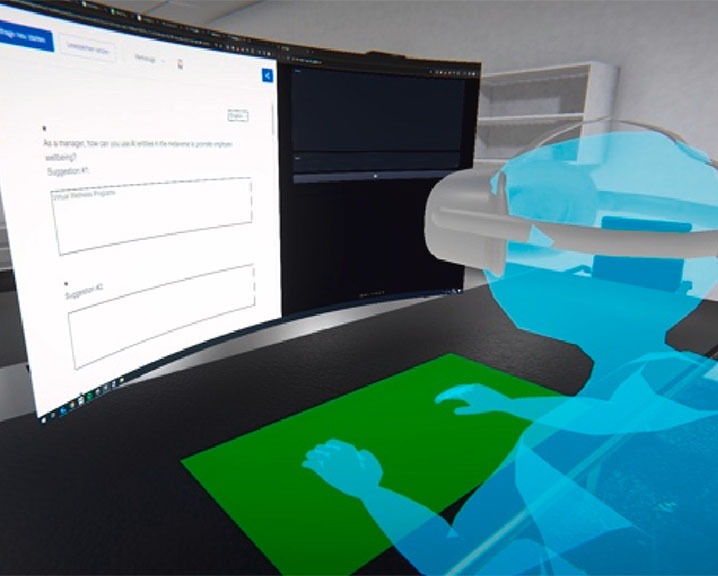

The research by Jochen, involving white-collar workers in Switzerland, looked at employee responses to 3 types of AI agents while participants were immersed in a virtual office environment using a head-mounted display (see images above):

1. Text-based AI agent

A text-based AI agent responding in 25-word-maximum text only.

2. Human-inspired desk robot

A desk robot that had some anthropomorphic characteristics such as communicating by voice and being displayed as a humanoid avatar.

3. Humanlike AI agent

A highly anthropomorphic AI agent with extensive human features such as distinctive facial features, a humanlike physique and wearing human clothes, as well as a name (Johan or Johanna).

Previous research on non-anthropomorphic AI agents has found that they evoke a broad spectrum of emotions among users – including connection, contentment, amusement and frustration.

Yet the research by Jochen found novel variations of these emotions, including surprise and amazement as subsets of amusement, and a curiosity that brought a keen eagerness to engage. “The novelty of interacting with visualised AI agents in a virtual workspace suggests that engaging with Johan/na evokes emotional responses like those experienced when encountering a real person,” said Jochen and his co-authors.

The research also uncovers additional emotions associated with interaction with lifelike AI agents, most notably (on the plus side) relational assurance and (on the negative side) perceived worthlessness.

Positive effects: relational assurance and trust in humanlike AI

Relational assurance in this context describes the sense of familiarity and comfort individuals experience in a relationship, particularly when AI provides support that fosters a sense of closeness. The concept of assurance, dating back to a study in the mid-1990s, reflects “an expectation of benign behaviour for reasons other than goodwill of the partner”. The research found that when participants’ interactions with highly anthropomorphic AI agents did not go smoothly (such as when the AI agent did not comprehend the user’s instruction), “tolerance and implicit forgiveness emerged”.

Through interviews with participants, Jochen and his co-authors found that many workers described feeling more comfortable with the humanlike AI agent, reflecting increased trust and confidence.

When AI feels like a co-worker, not a tool

As described by one interviewee: “Just because you have a face (with the highly anthropomorphic AI agent), it’s maybe a bit more familiar. You think yeah, you can talk to somebody actually… When you have like a face talking to you, you maybe feel a bit more comfortable… When there is a face, you feel more that it’s a co-worker.” As for the less anthropomorphic humanoid avatar, the interviewee said: “it’s more of a tool with the desk robot”.

Said another interviewee, who engaged with highly anthropomorphic AI agent Johanna: “It was the same size like me. It was a feeling of she’s in the same boat… she’s the same height. She’s also working on a computer. It generated the feeling of a colleague.”

The research also found, for some participants, a difference in approach with the humanlike AI agent including an expectation that less precise instructions could nevertheless be understood. Said one interviewee: “With the (desk) robot, I had more the feeling to make the answers short and simple… It looks more like a machine, and I know that to machines you have to give very precise instructions… With Johanna I had the feeling that I could talk like to my colleagues… With humans you expect that they have also the awareness and maybe to interpret it (as) something I want.”

Negative effects of workplace AI agents: perceived worthlessness and loss of autonomy

Perceived worthlessness can be related to scepticism, discomfort, pessimism, regret and self-blaming emotions relating to frustration. The research by Jochen found feelings of lost autonomy and independence when relying heavily on AI agents, particularly those with humanlike characteristics, so managers should take steps to address this issue.

In addition, some participants did not respond well to the humanlike appearance of Johan or Johanna, finding it unsettling or uncomfortable. “For instance, referring to the AI as ‘the woman’ reflected an extreme form of anthropomorphism, suggesting that its visual representation may have unintentionally crossed acceptable boundaries of human-machine interaction. This finding highlights the significant influence of an AI agent’s appearance on user perception, revealing that emotional responses are complex and vary widely based on individual preferences and psychological thresholds.”

The authors suggested that employers seek to mitigate this through clear training and guidelines. “These guidelines should position AI as a collaborative tool but under human direction that emphasises the retention of human skills,” says Jochen. “We also believe that some of these issues, such as those stemming from AI agents’ appearance, can be addressed through modification of such humanlike AI agents at minimal cost.”

Matching AI appearance with capabilities to prevent disappointment

One limitation observed in the research is that there can be acute disappointment among users if the AI fails to meet humanlike expectations through a mismatch between an AI’s stunning visual representation (what the research terms ‘excessive anthropomorphism’) and its actual behaviour in terms of speed and accuracy in producing results.

“We saw that if we got AI to be more human, but wasn’t responsive, it would be off-putting to people,” says Jochen, but he cautions not to read too much into this limitation as improvements in AI speed and capability may quickly iron out such mismatches.

AI anthropomorphism goes beyond looks: it shapes behaviour and politeness

While the term anthropomorphism derives from the Greek words for human and form, academic study has also defined anthropomorphism far more broadly to include the attribution of humanlike emotions and characteristics to nonhuman entities.

By comparing employees’ responses to the different types of AI agents, the research by Jochen found that workers’ interactions with Johan/Johanna (the highly human AI agents) tended to be more polite, with workers turning to the AI agent while speaking, greeting the AI agent with a hello or a hand wave, and using expressions like ‘please’ and ‘thank you’ more than with the less-humanlike AI agents. In contrast, most participants with the desk robots tended to stare at the screen instead of turning to the desk robot when speaking, and used more direct commands such as ‘tell me’ or ‘explain’, rarely using ‘please’ and ‘thank you’.

“The implications of this far more polite approach to lifelike AI agents is that it shapes the emotional experience of workers,” says Jochen. “It generates a perception of enhanced communication and collaboration, signals greater trust and social presence and seems to limit the transactional nature of human-AI interactions that are presently commonplace.”

From secretary desks to AI co-workers with opinions – it could get awkward

Few would venture to know for certain how AI will transform the workplace, but Jochen suggests that it could be a full-circle evolution – with a humanlike AI twist – to a very traditional setup in which a manager and an assistant worked very closely in the same office.

“We could be heading toward a situation where we work alongside anthropomorphic AI agents who would be genuine team members, sitting as an AI assistant in one’s own office,” says Jochen. “There is a possibility that could be our future, and this would perhaps mimic the olden days.”

A big difference, however, is that while human assistants may be reluctant to directly challenge the boss, anthropomorphic AI agents with all the answers in an instant would likely be less reluctant to do so. Jochen laughs when thinking about a situation where a humanlike AI agent tells the company chairman, at a meeting of the board of directors, that the chairman’s calculations are faulty – while the other board members valiantly seek to suppress their grins.

“AI has the potential to advance the workplace by leaps and bounds in efficiency, and anthropomorphic AI agents play a big part in this,” says Jochen. “But it could get a bit awkward.”

Humanlike AI may soon sit beside us at work, but we must ensure they are partners and not replacements

As artificial intelligence continues to evolve, the emergence of AI agents that mirror human appearance, voice and behaviour could fundamentally reshape how people experience work. While these systems have the potential to create stronger emotional connections, the same traits that may foster trust can also trigger feelings of dependence and loss of autonomy, and even frustration when performance fails to match appearance.

Thoughtful design and careful management are keys to the future of anthropomorphic AI in the workplace. Businesses that treat these agents as collaborative tools, which support rather than replace human skills, are more likely to see productivity gains without sacrificing employee well-being. The key lies in balancing emotional engagement with clear boundaries, ensuring that AI advances workplace efficiency while keeping people at the heart of the work experience.

Featured research

van Zelderen, A.P.A., Wu, S., Koszo, G. and Menges, J.I. (2025) “When AI gets personal: employee emotional responses to anthropomorphic AI agents in a virtual workspace”. Computers in Human Behavior.

Visualisations

Visualisations reproduced under a Creative Commons Attribution 4.0 International License (CC BY 4.0).

Related content

The emotional experience illustrated here raised additional questions regarding the socio-emotional costs and benefits of AI-human interactions in the workplace. For instance, questions surfaced about whether workers still possess psychological ownership over their work, and how AI-facilitated creativity can still feel rewarding. As Jochen Menges and his research team continue these scientific inquiries, they invite other scholars from all over the world to collaborate with them using these cutting-edge technological tools. Interested readers can learn more about their Openverse Open Science Initiative.